Big Data: Bad science on steroids?

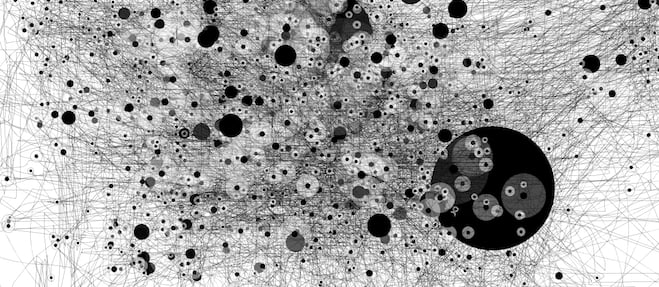

Experts struggle with how to tell the signal from the noise


In case you didn’t realize, we’re living in the era of big data. From sequencing the molecules of human life to divine our futures, to capturing fodder on Twitter to predict disease outbreaks, big data’s potential is massive. It’s the new black gold, after all, and it can cure cancer, transform business and government, foretell political outcomes, even deliver TV shows we didn’t know we wanted.

Or can it? Despite these big promises, the research community isn’t sold. Some say vast data collections—often user-generated and scraped from social media platforms or administrative databases—are not as prophetic or even accurate as they’ve been made out to be. Some big data practices are downright science-ish. Here’s why:

Low signal-noise ratio

Let’s start with genome sequencing, an example of micro-level big data capture. A newly published commentary in the journal Nature argued that, for most common diseases, screening people’s genomes is excessive and ineffective. That’s because, so far, genetic differences have been poor predictors of most diseases in most people. For example, with obesity or type-2 diabetes, many people who carry a given genetic variant never develop the condition, while others do. If we started screening everybody, we’d have too many false positives and false negatives to make such an exercise worthwhile.
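
To see why a weak predictor makes mass screening unrewarding, here is a rough sketch in Python. Every number in it (the population size, how common the condition is, how often the test catches it) is invented for illustration and does not come from the Nature commentary. The function name `screening_outcomes` is likewise hypothetical.

```python
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Tally screening results for a test of given sensitivity and specificity.

    All inputs are illustrative assumptions, not figures from the commentary.
    """
    affected = population * prevalence
    unaffected = population - affected

    true_positives = affected * sensitivity
    false_negatives = affected - true_positives
    true_negatives = unaffected * specificity
    false_positives = unaffected - true_negatives

    flagged = true_positives + false_positives
    # Share of flagged people who really have the condition.
    ppv = true_positives / flagged if flagged else float("nan")

    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        "positive_predictive_value": ppv,
    }


# Hypothetical scenario: 10,000 people, 10% affected, and a genetic test
# that is right 60% of the time in both directions.
result = screening_outcomes(10_000, 0.10, 0.60, 0.60)
for name, value in result.items():
    print(f"{name}: {value:,.2f}")
```

Under these made-up assumptions, the people wrongly flagged far outnumber those correctly flagged, and a sizeable share of real cases are missed, which is the pattern the commentary warns about.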

The commentary’s lead author, Dr. Muin Khoury of the Centers for Disease Control and Prevention in the U.S., told Science-ish that the problem with big data genomics is that it’s hard to tell the signal from the noise. In the past, researchers working with smaller data sets would have a relatively modest number of statistically significant associations that were the result of chance. “In the genome era, when people started looking at millions of variants in a data set, they lowered their signal-noise ratio.” In other words, the bigger the data, the more opportunity to find correlations—that may or may not actually tell us something real.
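
The arithmetic behind that point can be sketched in a few lines of Python. This is a simplified illustration, not Khoury's analysis: it assumes every association tested is truly null, that the tests are independent, and that "significant" means the conventional p < 0.05 cutoff. The function name `chance_findings` is made up for this example.

```python
def chance_findings(num_tests: int, alpha: float = 0.05) -> tuple[float, float]:
    """Return (expected false positives, probability of at least one)
    when every tested association is truly null and tests are independent.
    """
    expected = num_tests * alpha
    prob_at_least_one = 1.0 - (1.0 - alpha) ** num_tests
    return expected, prob_at_least_one


# Compare a small study with genome-scale searches (test counts are illustrative).
for num_tests in (20, 1_000, 1_000_000):
    expected, prob_any = chance_findings(num_tests)
    print(
        f"{num_tests:>9,} tests: about {expected:,.0f} chance hits expected, "
        f"P(at least one) = {prob_any:.3f}"
    )
```

The expected number of chance hits grows in step with the number of tests, so a search across a million variants can throw up tens of thousands of "significant" associations even when nothing real is there.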

Nassim Taleb, the author of Antifragile, does a good job of explaining why bogus statistical relationships may be more common in the big data era, pointing out the parallel challenges with observational studies in health research. “In observational studies, statistical relationships are examined on the researcher’s computer. In double-blind cohort experiments, however, information is extracted in a way that mimics real life. The former produces all manner of results that tend to be spurious more than eight times out of 10.”

Hypothesis-free science-ish

Big data also goes hand-in-hand with “hypothesis-free science,” which some say departs from scientific principles. Instead of starting with a hypothesis and working out which data you would need to test it, researchers cast around for associations in data sets that are already available. Dr. Helen Wallace (PhD), director of the genetic science public interest group GeneWatch, put it this way: “If you don’t have a good scientific hypothesis about what causes a disease, you’ll probably end up measuring the wrong data in the first place.”

Screening the genome of everyone at birth, for example, seeks out the genetic basis for disease but doesn’t account for things like environmental exposures or lifestyle factors, which may actually be important predictors of sickness. Wallace likens this to forecasting the weather by only measuring the temperature with a thermometer. “You would miss out on other key weather predictors, like barometric pressure.”

Big quality?

There are also questions about the integrity of big data. A recent article on Google Flu Trends showed that the online tracker massively overestimated the year’s flu season. For Rumi Chunara, a researcher who works on the big data infectious disease surveillance project HealthMap, it comes down to the quality of the data. “Sometimes you can find relationships, things that come up that could be happenstance, and could be confounded by something you’re not paying attention to,” she said.

The Google Flu Trends misfire may have been caused by this year’s media hysteria around influenza. People read the headlines about the deadly flu season and hit the Internet for information. Google Flu Trends counted those extra searches as signs of actual flu sufferers, when they were really the product of a media feedback loop.

Similarly, HealthMap does not capture every infectious disease around the world—only the ones that are reported in the news media. This limits tracking to events that are picked up by media outlets in the 12 languages HealthMap’s algorithms are designed to detect. So, even though researchers are constantly tweaking their algorithms to limit error, infectious disease trends can be more media construct than reality.

Still, Chunara made a key point: “You have issues with every kind of data set.” That’s certainly true when it comes to official flu-tracking mechanisms. She sees big data as an adjunct to other more traditional approaches, not something that will supplant them. “We’re not going to be able to match some gold standard but we need to think about what aspects these new data sets bring,” she said. “These methods provide information our traditional methods don’t.” And they’ll do it faster.

It’ll take time for the scientific community to recalibrate its methods for the big data era. For now, just be wary of bad science on steroids.

Science-ish is a joint project of Maclean’s, the Medical Post and the McMaster Health Forum. Julia Belluz is the senior editor at the Medical Post. Got a tip? Message her at [email protected] or on Twitter @juliaoftoronto