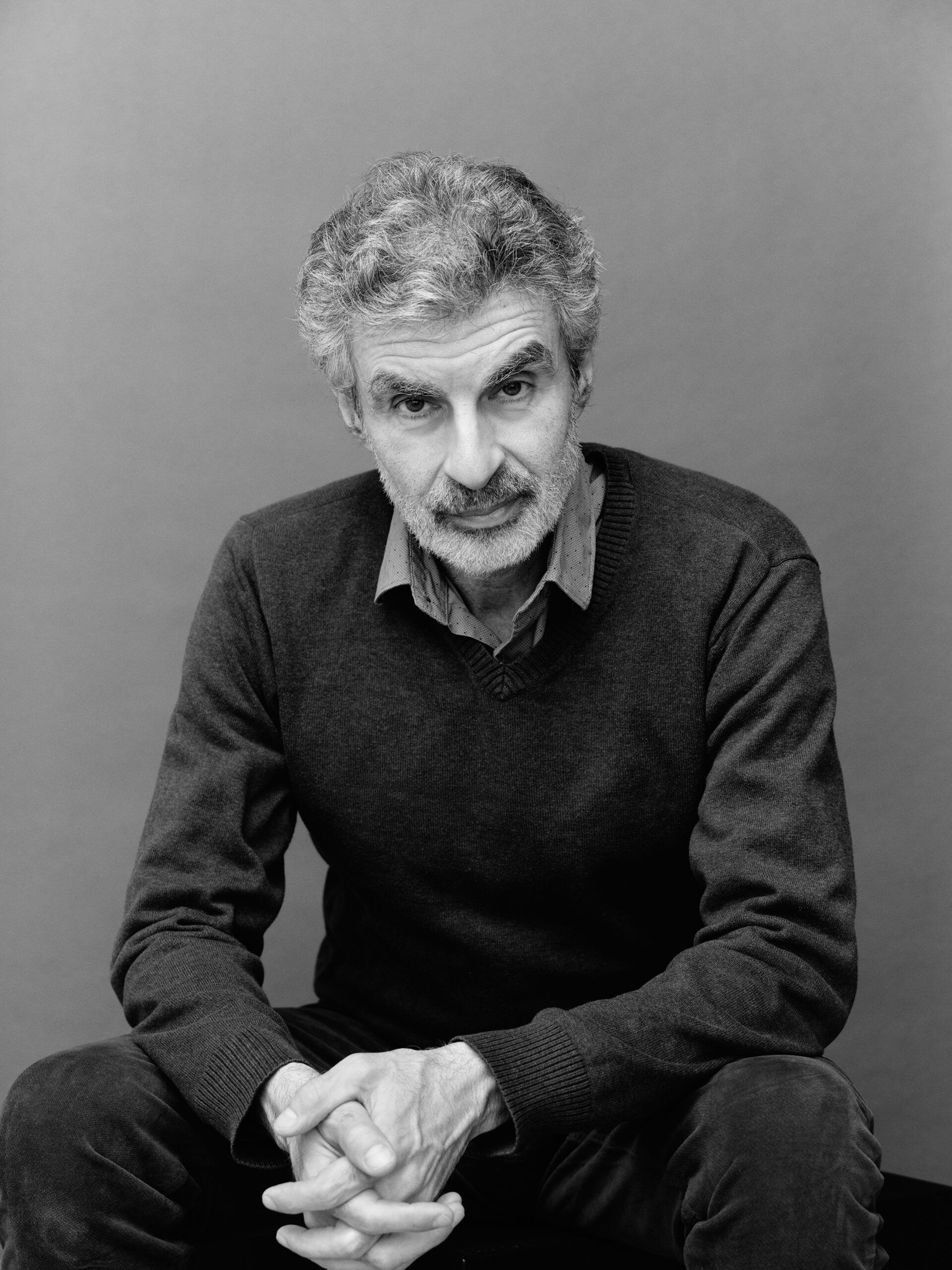

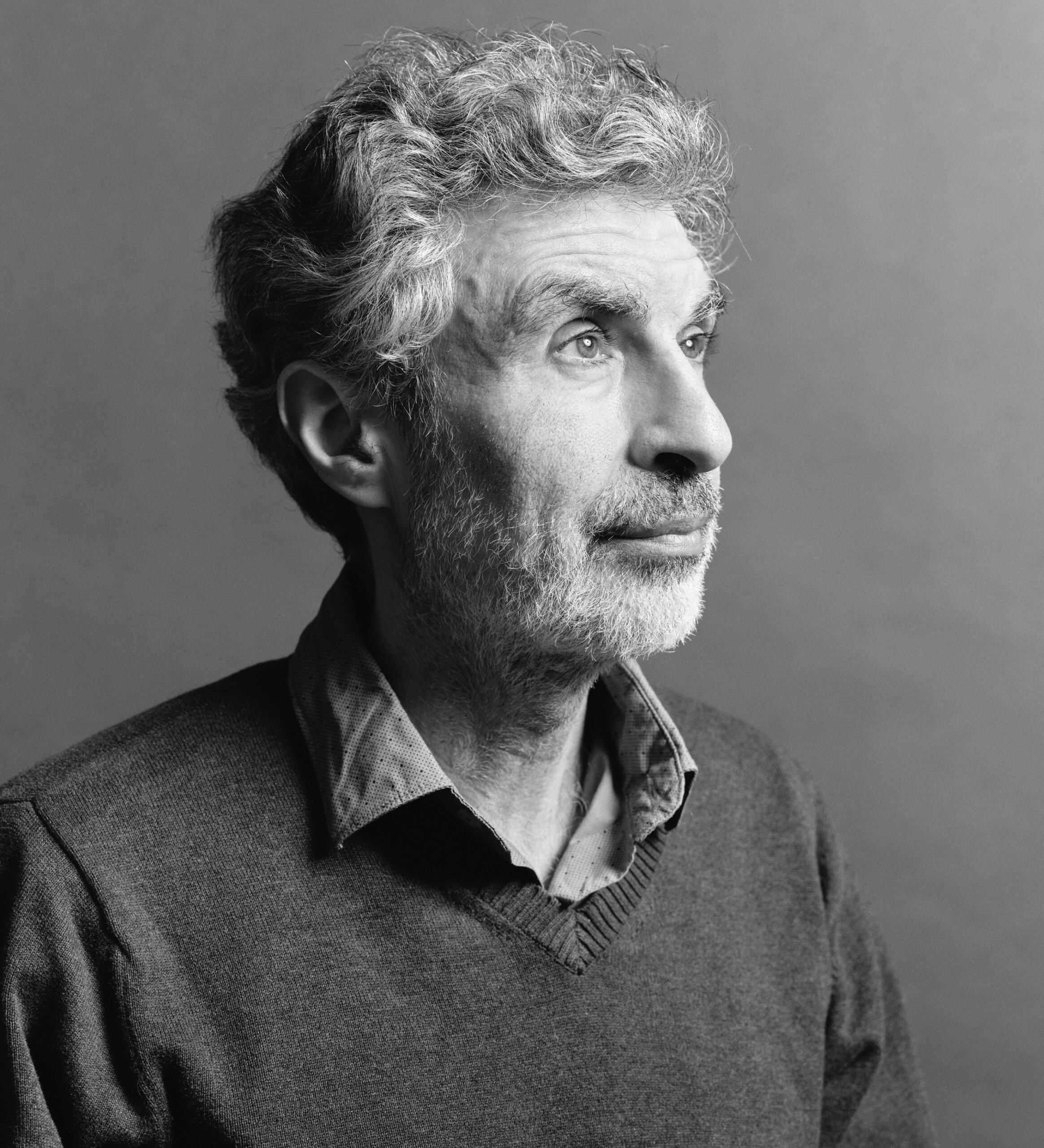

The Power List: Yoshua Bengio

Bengio, who holds our No. 1 spot in AI, unleashed an age-defining innovation. Now he’s trying to tame it.


April 1, 2024

When ChatGPT, DALL-E and other generative AI products went mainstream in 2022, some people thought the sky was falling. The technology could wipe out millions of jobs, make creativity a thing of the past, even destroy democracy, they said. For Geoffrey Hinton and Yoshua Bengio, two of the Canadian scientists whose pioneering research made those tools possible, the news was even more dire. Generative AI, they argued, was a harbinger of future artificial general intelligence—AI that’s capable of doing any cognitive task that we can. Soon enough, AGI would become superintelligent and, left unchecked, could spell the end of humanity. Bad actors could weaponize AI, or even more catastrophically, AI could weaponize itself.

Both Hinton, who formerly ran Google Brain Toronto, and Bengio, the founder and scientific director of MILA (previously the Montreal Institute for Learning Algorithms), now spend much of their time warning the world about AI armageddon. As modern-day Oppenheimers, they’ve both received a ton of attention, good and bad, but Bengio’s emerged as our best hope of staving off apocalypse. Wisely, he sees the AI problem as political and scientific, and has committed himself to solving both sides. At MILA, Bengio completely recalibrated his research in order to correct a fatal flaw in AI’s design: our inability to control it. “Right now, there’s no guarantee that AI won’t turn against humans,” he says. “There are strong theoretical arguments that it will turn against us if we continue in the current direction.”

The tricky thing, Bengio argues, is that the only way we know how to train AI is by teaching it to respond to, and maximize, rewards. That would be fine if, metaphorically speaking, AI were a dog or a cat. But the reality, he says, is that future AI will be more like a grizzly bear, entirely focused on getting its fish, no matter who or what is in the way. Bengio is aiming to build some “epistemic humility” into AI. (In other words, teaching the tech that it doesn’t always know the right answer and should therefore proceed with caution.) It’s a highly complex and technical task; in February, he issued a call-out for more researchers.

Bengio believes tech companies and scientists themselves would also benefit from some of that humility. Now 60, he’s surprisingly self-effacing for someone often dubbed a “godfather” of AI and a recipient of the Turing Award—the Nobel Prize of computing. In the face of enormous existential threat, he says, modesty, responsibility and open-mindedness are necessary values. “The most difficult thing for me, psychologically,” Bengio says, “is having unproductive debates with colleagues who are my friends, who won’t consider what they think is speculation and what I think is cautious reasoning about the future.” Even more worrisome are the people he encounters who would prefer to see humanity replaced by superintelligent AI. “They think that intelligence is a supreme value,” he says. “They’d make it easier for the bear to get out of its cage.”

Humbling AI is easier said than done, of course, and Bengio isn’t sure when we’ll get there. We don’t know how much time we have before the superintelligent iteration arrives. (Bengio’s best guess ranges from a few years to a couple of decades.) What he does know is that, in order to make AI safer, governments and corporations need to immediately invest more resources in funding research and creating rigorous regulatory frameworks. This is the political prong of the problem. Do governments appreciate the dangers of AI, and are they doing enough to mitigate them? “I could say no and no,” Bengio says, “but the reality is much more complicated. I think there’s been an amazing shift toward yes.”

That shift involves a number of recent developments, including the creation of the UNESCO Recommendation on the Ethics of Artificial Intelligence and the EU AI Act. According to Bengio, national security staff and advisers, used to grappling with nuclear proliferation and pandemics, understand AI’s potential threat the best. But, for better and worse, AI has already disrupted many industries, and future harms—like widespread disinformation and lethal autonomous weapons—could arrive long before superintelligence.

Bengio has, accordingly, picked up the pace, and is now on pretty much every global leader’s speed dial. He works with the Global Partnership on Artificial Intelligence, sitting on a committee that advises UN Secretary General António Guterres. Last July, Bengio testified before the U.S. Senate, where he argued for badly needed international collaboration. Our efforts, he said, should be “on par with such past efforts as the space program or nuclear technologies,” to make sure we reap AI’s benefits while protecting our futures. Currently, Bengio is chairing a report commissioned during the U.K.’s AI Safety Summit, which will be to artificial intelligence what the annual IPCC report is to climate change. “I’m trying to focus on what can be done to move the needle in the right direction,” he says. “I’ve always been a positive person.”

This story appears in the May issue of Maclean’s.